We introduce the novel task of interactive scene exploration, in which robots autonomously explore environments and produce an action-conditioned scene graph (ACSG) that captures the structure of the underlying environment. The ACSG accounts for both low-level information (geometry and semantics) and high-level information (action-conditioned relationships between entities) in the scene. To this end, we present the Robotic Exploration (RoboEXP) system, which incorporates a Large Multimodal Model (LMM) and an explicit memory design to enhance the system's capabilities. The robot reasons about what to explore and how to explore it, accumulating new information through interaction and incrementally constructing the ACSG. Leveraging the constructed ACSG, we demonstrate the effectiveness and efficiency of RoboEXP in facilitating a wide range of real-world manipulation tasks involving rigid, articulated, nested, and deformable objects.

Low-level Memory

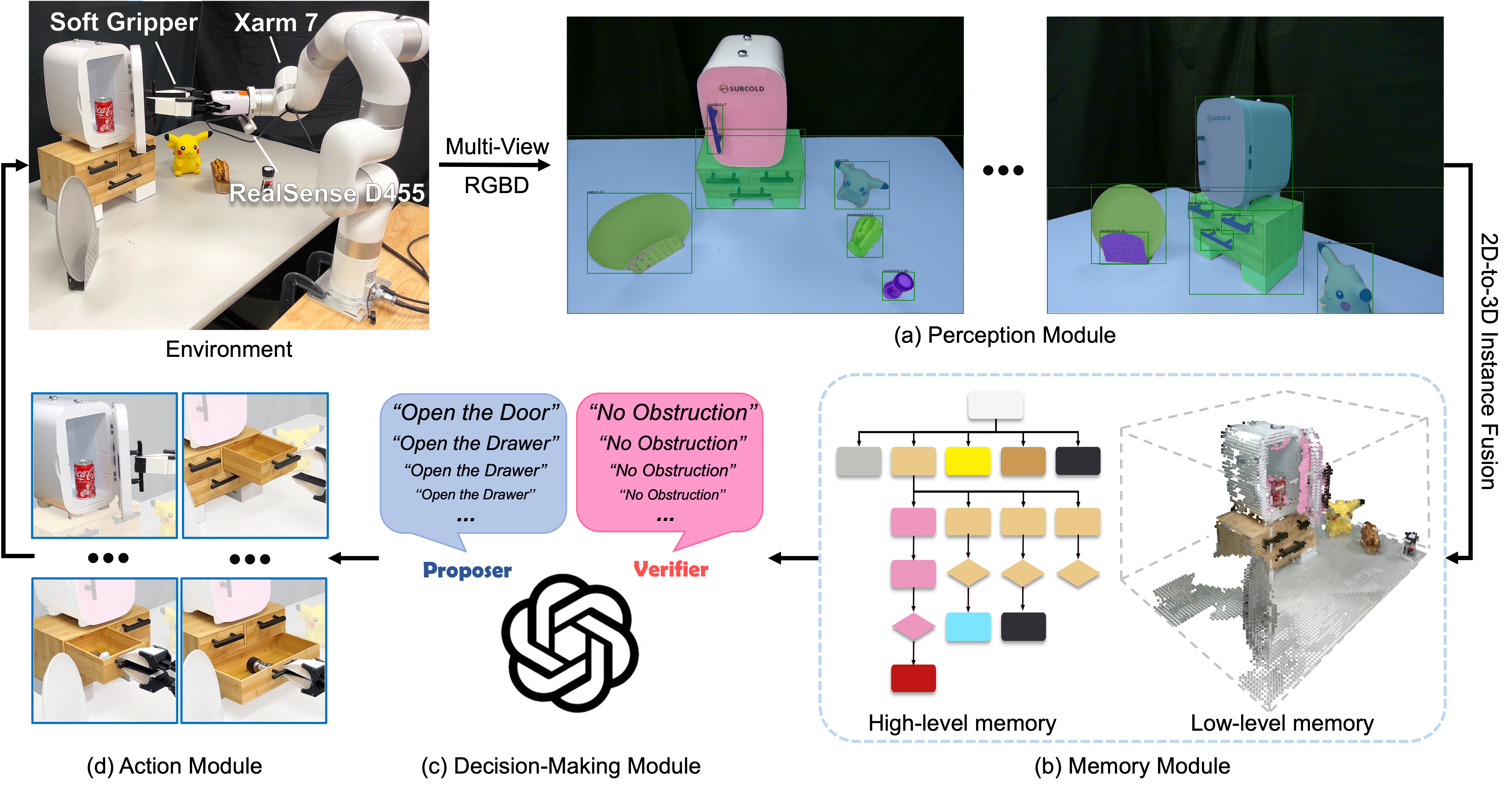

We formulate interactive exploration as an action-conditioned 3D scene graph (ACSG) construction and traversal problem. Our ACSG is an actionable, spatial-topological representation that models objects and their interactive and spatial relations in a scene, capturing both the high-level graph (c) and the corresponding low-level memory (b).
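To make the "action-conditioned" part concrete, here is a minimal Python sketch of one way such a graph could be stored and traversed. It is not the RoboEXP implementation: the class and field names (`ObjectNode`, `ACSG`, `via_action`, `actions_to_reach`) are hypothetical, and the low-level memory is reduced to a 3D position. Each node records the object it hangs off, its spatial relation to that object, and, optionally, the action that must be executed before it becomes observable; walking from a target back to the root yields the actions needed to reach it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical sketch of an action-conditioned scene graph; not the RoboEXP code.

@dataclass
class ObjectNode:
    name: str
    position: Tuple[float, float, float]  # stand-in for low-level memory (geometry)
    parent: Optional[str] = None          # object this node is attached to or contained in
    relation: Optional[str] = None        # spatial relation to parent, e.g. "inside", "part_of"
    via_action: Optional[str] = None      # action required before this node is observable

@dataclass
class ACSG:
    nodes: Dict[str, ObjectNode] = field(default_factory=dict)

    def add(self, node: ObjectNode) -> None:
        # Incremental construction: new observations are merged node by node.
        self.nodes[node.name] = node

    def actions_to_reach(self, name: str) -> List[str]:
        # Walk from the target up to the root, collecting required actions,
        # then reverse so they come out in execution order (outermost first).
        actions: List[str] = []
        node: Optional[ObjectNode] = self.nodes[name]
        while node is not None:
            if node.via_action is not None:
                actions.append(node.via_action)
            node = self.nodes.get(node.parent) if node.parent is not None else None
        return actions[::-1]


graph = ACSG()
graph.add(ObjectNode("cabinet", (1.0, 0.0, 0.0)))
graph.add(ObjectNode("drawer", (1.0, 0.0, 0.3), parent="cabinet", relation="part_of"))
graph.add(ObjectNode("box", (1.0, 0.0, 0.3), parent="drawer", relation="inside", via_action="open drawer"))
graph.add(ObjectNode("toy", (1.0, 0.0, 0.3), parent="box", relation="inside", via_action="open box"))
print(graph.actions_to_reach("toy"))  # ['open drawer', 'open box']
```

In this sketch, attaching the enabling action to the relation (rather than to a separate plan) lets one structure answer both "what is in the scene" and "what must the robot do first to see it".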

Our RoboEXP system comprises four modules. With RGB-D observations as input, the perception module (a) and the memory module (b) construct the ACSG by leveraging vision foundation models and our explicit memory design. The ACSG is then used by the decision-making module (c) to generate exploration plans, which the action module (d) executes.
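The loop below is a toy Python sketch of how the four modules could hand data to one another. It is an assumption-laden stand-in, not the RoboEXP pipeline: the hypothetical `World` class fakes both RGB-D perception (it simply reports visible object names) and the action module (opening a container reveals what it hides), and `decide` replaces the LMM-based decision-making with a hypothetical rule that opens any known, not-yet-explored object listed in `OPENABLE`.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical stand-in for what the decision module considers openable.
OPENABLE = {"cabinet", "drawer", "box"}

@dataclass
class World:
    visible: Set[str]
    hidden: Dict[str, List[str]]  # container -> objects revealed when it is opened

    def observe(self) -> Set[str]:
        # Stand-in for the perception module (a): report visible object names.
        return set(self.visible)

    def open(self, container: str) -> None:
        # Stand-in for the action module (d): opening reveals hidden contents.
        self.visible.update(self.hidden.pop(container, []))

@dataclass
class Memory:
    # Stand-in for the memory module (b).
    objects: Set[str] = field(default_factory=set)
    explored: Set[str] = field(default_factory=set)

    def update(self, detections: Set[str]) -> None:
        self.objects |= detections  # merge detections, deduplicating by name

def decide(memory: Memory) -> Optional[str]:
    # Stand-in for the decision-making module (c): pick an unexplored openable object.
    candidates = sorted((memory.objects & OPENABLE) - memory.explored)
    return candidates[0] if candidates else None

def explore(world: World, max_steps: int = 10) -> Memory:
    memory = Memory()
    for _ in range(max_steps):
        memory.update(world.observe())  # (a) perceive, (b) update memory
        target = decide(memory)         # (c) plan the next exploration action
        if target is None:
            break                       # nothing left worth exploring
        world.open(target)              # (d) execute it
        memory.explored.add(target)
    return memory


world = World(visible={"apple", "cabinet", "drawer"},
              hidden={"cabinet": ["cup"], "drawer": ["box"], "box": ["toy"]})
memory = explore(world)
print(sorted(memory.objects))   # ['apple', 'box', 'cabinet', 'cup', 'drawer', 'toy']
print(sorted(memory.explored))  # ['box', 'cabinet', 'drawer']
```

Note how the box, which is only discovered after the drawer is opened, is itself explored on a later iteration; this is the incremental behavior the closed perception-memory-decision-action loop is meant to provide.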

Our RoboEXP system can handle human interventions during the exploration process. It automatically detects newly introduced objects and explores them when necessary, and it tracks the position of the human hand to identify areas that need to be re-explored.
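A minimal Python sketch of the two intervention checks, under stated assumptions: new objects are found by comparing current detections against memory by name, and a tracked hand trajectory flags every remembered object the hand passed within `radius` meters of. The function names and the 0.15 m default radius are hypothetical, not values taken from RoboEXP.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[float, float, float]

def new_objects(known: Set[str], detected: Set[str]) -> Set[str]:
    # Objects present in the current detections that memory has never seen.
    return detected - known

def objects_to_reexplore(positions: Dict[str, Point],
                         hand_track: Iterable[Point],
                         radius: float = 0.15) -> Set[str]:
    # Flag each remembered object whose position came within `radius`
    # meters of any tracked hand position (hypothetical threshold).
    track = list(hand_track)
    return {name for name, pos in positions.items()
            if any(math.dist(pos, hand) <= radius for hand in track)}


print(new_objects({"drawer", "apple"}, {"drawer", "apple", "cabinet"}))  # {'cabinet'}
positions = {"drawer": (0.5, 0.0, 0.3), "cabinet": (1.0, 0.2, 0.4)}
print(objects_to_reexplore(positions, [(0.0, 0.0, 0.3), (0.45, 0.0, 0.3)]))  # {'drawer'}
```

Only the drawer is flagged here because the hand came within 0.05 m of it, while its closest approach to the cabinet was roughly 0.59 m.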

Intervention: A person positions a cabinet on the table.

Our interactive exploration pipeline can be deployed on the Stretch robot for household scenarios.

A mobile robot explores the drawers to see what's inside them.

We show additional results across experimental settings with varying numbers, types, and layouts of objects.

@inproceedings{jiang2024roboexp,
  title={RoboEXP: Action-Conditioned Scene Graph via Interactive Exploration for Robotic Manipulation},
  author={Jiang, Hanxiao and Huang, Binghao and Wu, Ruihai and Li, Zhuoran and Garg, Shubham and Nayyeri, Hooshang and Wang, Shenlong and Li, Yunzhu},
  booktitle={CoRL},
  year={2024}
}